Machine learning has quietly infiltrated every corner of audio production, fundamentally reshaping how sound designers approach their craft. What began as simple pattern recognition has evolved into sophisticated systems capable of generating entirely new audio content, analyzing complex soundscapes, and even making creative decisions that once required years of professional experience to develop.

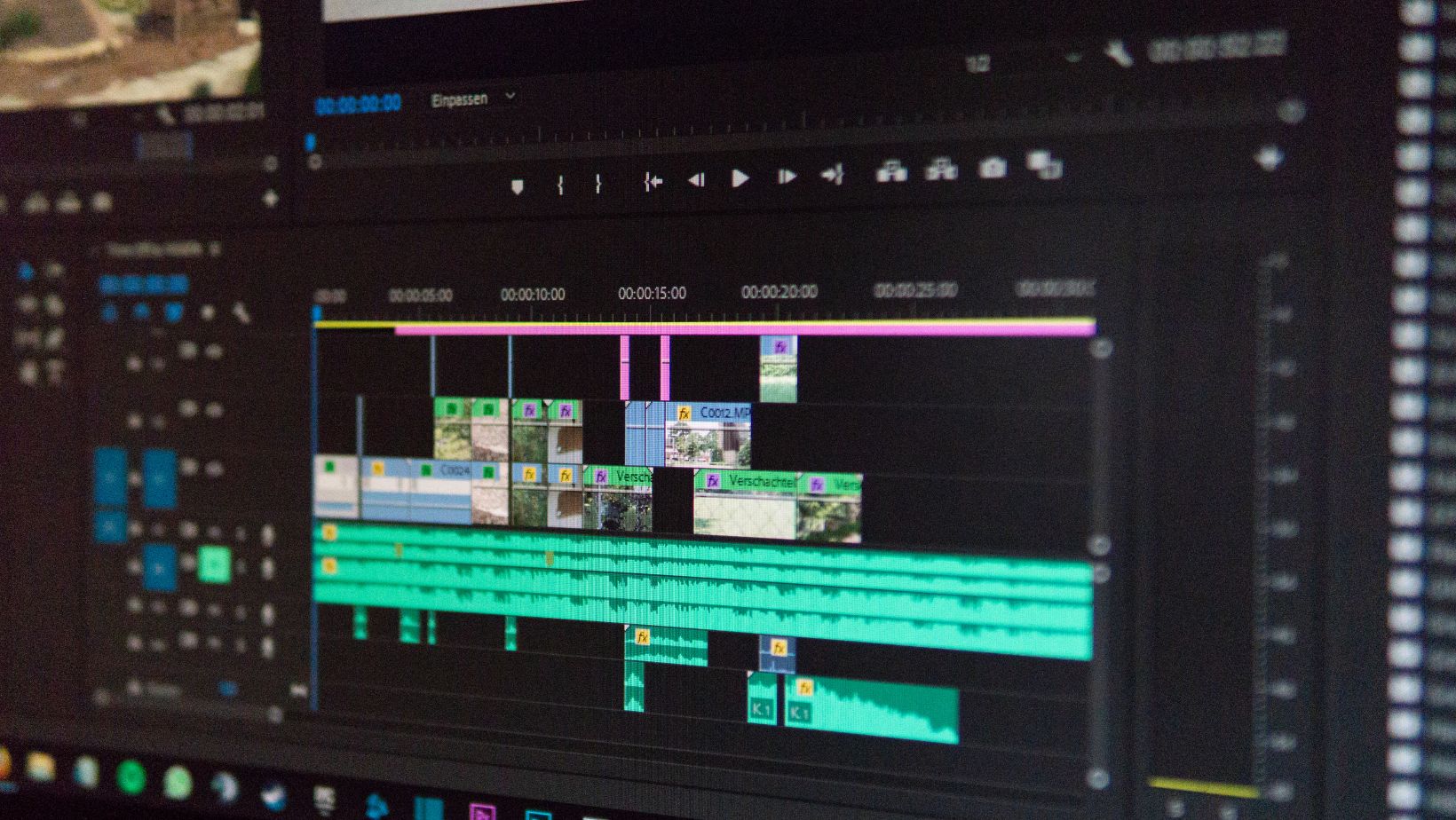

The transformation isn’t happening in isolation: it’s emerging from the intersection of computational power, vast audio datasets, and increasingly sophisticated neural network architectures. Unlike traditional algorithmic approaches that follow hand-written rules, machine learning systems learn from examples, building their own internal representations for analyzing and manipulating audio. This shift is arguably the most significant change in sound design methodology since the transition from analog to digital workflows.

Generative Audio Models: Creating Something from Nothing

Contemporary generative audio models would have seemed like science fiction just a decade ago, yet their core idea is straightforward. They are trained on large collections of audio and learn the statistical relationships between frequencies, timing patterns, and spectral characteristics. Once trained, they generate new audio by sampling from those learned patterns, producing material that keeps the stylistic character of the training data while combining its elements in ways that never appeared in it.
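
To make the learn-then-sample loop concrete, here is a deliberately tiny stand-in for a generative model: a first-order Markov chain over quantized sample values. Neural generative models work very differently inside, and the helper names (`quantize`, `train_transitions`, `generate`, `dequantize`) are hypothetical, but the sketch follows the same two steps: estimate statistics from training audio, then sample new material from them.

```python
import math
import random
from collections import Counter, defaultdict

def quantize(samples, levels=16):
    """Map floats in [-1, 1] to integer bins 0..levels-1."""
    return [min(levels - 1, int((s + 1.0) / 2.0 * levels)) for s in samples]

def train_transitions(symbols):
    """Count how often each bin is followed by each other bin (first-order Markov chain)."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(symbols, symbols[1:]):
        counts[prev][nxt] += 1
    return counts

def generate(counts, start, length, seed=0):
    """Sample a new symbol sequence from the learned transition counts."""
    rng = random.Random(seed)
    out = [start]
    while len(out) < length:
        followers = counts.get(out[-1])
        if not followers:
            # Dead end (state never followed by anything in training): jump to a seen state.
            out.append(rng.choice(sorted(counts)))
            continue
        symbols, weights = zip(*followers.items())
        out.append(rng.choices(symbols, weights=weights, k=1)[0])
    return out

def dequantize(symbols, levels=16):
    """Map bins back to the centre of their amplitude range."""
    return [(q + 0.5) / levels * 2.0 - 1.0 for q in symbols]

# Hypothetical training material: a 440 Hz tone at 8 kHz plus a little noise.
rng = random.Random(42)
training = [0.8 * math.sin(2 * math.pi * 440 * n / 8000) + rng.uniform(-0.05, 0.05)
            for n in range(4000)]
symbols = quantize(training)
model = train_transitions(symbols)
new_audio = dequantize(generate(model, start=symbols[0], length=2000))
```

The generated sequence is built almost entirely from transitions that occurred in training, which is why it inherits the material's character; neural models capture far longer-range structure than this one-sample memory.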

Many systems work in the frequency domain, representing audio not as raw waveforms but as spectrograms: spectral content that evolves over time. This approach allows them to generate sounds that maintain musical and acoustic coherence while introducing variations that human designers might never consider. The results often surprise even experienced sound designers with combinations that feel both familiar and refreshingly new.
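
A spectrogram is the usual frequency-domain representation. The sketch below computes a magnitude spectrogram with a short-time Fourier transform in plain Python; the frame size, hop, and periodic Hann window are common choices assumed here rather than taken from any particular model, and a real pipeline would use an FFT library instead of this naive DFT.

```python
import cmath
import math

def hann(n):
    """Periodic Hann window of length n."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / n) for i in range(n)]

def magnitude_spectrogram(signal, frame_size=128, hop=64):
    """STFT magnitudes: one row per frame, one column per frequency bin
    0..frame_size//2 (naive O(N^2) DFT, written for clarity rather than speed)."""
    window = hann(frame_size)
    frames = []
    for start in range(0, len(signal) - frame_size + 1, hop):
        chunk = [signal[start + n] * window[n] for n in range(frame_size)]
        row = []
        for k in range(frame_size // 2 + 1):
            acc = sum(chunk[n] * cmath.exp(-2j * math.pi * k * n / frame_size)
                      for n in range(frame_size))
            row.append(abs(acc))
        frames.append(row)
    return frames

fs = 8000
tone = [math.sin(2 * math.pi * 1000 * n / fs) for n in range(1024)]
spec = magnitude_spectrogram(tone)
bin_hz = fs / 128  # 62.5 Hz per bin
peak_bins = [max(range(len(row)), key=row.__getitem__) for row in spec]
```

For the 1 kHz test tone, every frame peaks in bin 16, since each bin spans 8000 / 128 = 62.5 Hz and 16 × 62.5 = 1000 Hz.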

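WaveNet and similar architectures have demonstrated remarkable capability in generating realistic human speech. WaveNet takes a different route from spectrogram-based systems: it models the raw waveform directly, predicting one sample at a time with each prediction conditioned on the samples before it. Its applications extend far beyond vocal synthesis; the same principles can be applied to generating ambient textures, creating variations of existing sound effects, or composing entirely synthetic soundscapes that evolve organically over extended periods.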
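
Two details of the published WaveNet design explain how it handles raw samples: each sample is compressed with μ-law companding and quantized to 256 classes, so predicting the next sample becomes a 256-way classification, and the network stacks dilated causal convolutions whose dilation doubles per layer (1, 2, 4, ..., 512), giving each ten-layer stack a receptive field of 1024 samples. The Python sketch below illustrates only those two pieces; it is not a trainable WaveNet, and the helper names are hypothetical.

```python
import math

MU = 255  # 8-bit mu-law: 256 output classes

def mu_law_encode(x, mu=MU):
    """Compress a sample in [-1, 1] and quantize it to an integer class 0..mu."""
    y = math.copysign(math.log1p(mu * abs(x)) / math.log1p(mu), x)
    return int((y + 1) / 2 * mu + 0.5)

def mu_law_decode(q, mu=MU):
    """Invert the quantization and the companding curve."""
    y = 2 * q / mu - 1
    return math.copysign(((1 + mu) ** abs(y) - 1) / mu, y)

def causal_dilated_conv(x, weights, dilation):
    """y[t] = sum_i weights[i] * x[t - i*dilation]; inputs before t=0 count as zero."""
    return [sum(w * x[t - i * dilation] for i, w in enumerate(weights)
                if t - i * dilation >= 0)
            for t in range(len(x))]

def receptive_field(dilations, kernel_size=2):
    """Number of input samples that influence one output of the stacked layers."""
    return 1 + sum(d * (kernel_size - 1) for d in dilations)

print(receptive_field([2 ** i for i in range(10)]))  # 1024
print(causal_dilated_conv([1.0, 0.0, 0.0, 0.0, 0.0], [1.0, 0.5], dilation=2))  # [1.0, 0.0, 0.5, 0.0, 0.0]
```

Causality means output `t` never reads input later than `t`, which is what makes sample-by-sample generation possible.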

Intelligent Sound Analysis and Categorization

Machine learning excels at pattern recognition tasks that would be impossibly time-consuming for humans to perform manually. Advanced audio analysis systems can examine vast sound libraries, automatically identifying similarities, categorizing content by mood or intensity, and even detecting subtle acoustic characteristics that correlate with emotional responses.
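
Mood labels are subjective, but intensity can be approximated from simple descriptors. The sketch below assumes just two hand-picked features (RMS level in dB and zero-crossing rate) and a nearest-centroid classifier, and shows the basic supervised pattern: describe each sound numerically, learn one prototype per labelled category, and assign new sounds to the closest prototype. Production systems typically use learned embeddings and far more labelled examples; all names and labels here are hypothetical.

```python
import math
import random

def features(samples):
    """Two cheap descriptors: RMS level in dB and zero-crossing rate (crossings per sample)."""
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    level_db = 20 * math.log10(max(rms, 1e-9))
    crossings = sum(1 for a, b in zip(samples, samples[1:]) if (a < 0) != (b < 0))
    return (level_db, crossings / (len(samples) - 1))

def fit_centroids(labelled):
    """labelled: list of (feature_tuple, label). Returns {label: mean feature vector}."""
    sums, counts = {}, {}
    for feat, label in labelled:
        acc = sums.setdefault(label, [0.0] * len(feat))
        for i, v in enumerate(feat):
            acc[i] += v
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}

def classify(feat, centroids):
    """Assign the label whose centroid is nearest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(feat, centroids[label]))

rng = random.Random(1)

def tone(amp, freq, n=2000, fs=8000):
    return [amp * math.sin(2 * math.pi * freq * i / fs) for i in range(n)]

def noise(amp, n=2000):
    return [rng.uniform(-amp, amp) for _ in range(n)]

# Hypothetical labelled examples: quiet tones as "calm", loud noise as "intense".
training = [(features(tone(0.05, 110)), "calm"), (features(tone(0.03, 220)), "calm"),
            (features(noise(0.9)), "intense"), (features(noise(0.7)), "intense")]
centroids = fit_centroids(training)
print(classify(features(tone(0.04, 165)), centroids))  # calm
print(classify(features(noise(0.8)), centroids))  # intense
```

Because the level feature spans tens of decibels while zero-crossing rate stays between 0 and 1, level dominates the distance here; a real system would standardize features first.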

These analysis capabilities transform how sound designers interact with their libraries. Instead of manually browsing through thousands of files, designers can ask the system to locate sounds with specific characteristics, such as every recording with a particular type of room reverb, or sounds that share harmonic content with a reference track. This dramatically accelerates the sound selection process while revealing connections between sounds that might not be immediately obvious.
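
Similarity search usually reduces each file to a feature vector and ranks the library by a similarity measure. This Python sketch assumes the vectors are already computed, here as 12-bin pitch-class profiles (how much energy falls on each note name), and ranks them by cosine similarity against a reference. The file names and energy values are made up for illustration.

```python
import math

PITCH_CLASSES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

def profile(energies):
    """Turn {note_name: energy} into a 12-bin pitch-class vector (missing notes = 0)."""
    return [energies.get(pc, 0.0) for pc in PITCH_CLASSES]

def cosine(a, b):
    """Cosine similarity: 1.0 for identical direction, 0.0 for no shared content."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def rank_by_similarity(query, library, top_k=3):
    """Return the names of the top_k library entries most similar to the query."""
    scored = sorted(((cosine(query, vec), name) for name, vec in library.items()),
                    reverse=True)
    return [name for _, name in scored[:top_k]]

# Made-up, precomputed profiles standing in for a real analysis pass.
library = {
    "pad_c_major.wav": profile({"C": 1.0, "E": 0.8, "G": 0.9}),
    "drone_a_minor.wav": profile({"A": 1.0, "C": 0.6, "E": 0.7}),
    "bell_f_sharp.wav": profile({"F#": 1.0, "A#": 0.5, "C#": 0.6}),
    "noise_burst.wav": profile({pc: 0.3 for pc in PITCH_CLASSES}),
}
reference = profile({"C": 0.9, "E": 0.7, "G": 1.0, "B": 0.2})
print(rank_by_similarity(reference, library))
# ['pad_c_major.wav', 'noise_burst.wav', 'drone_a_minor.wav']
```

The flat noise burst scores slightly above the A-minor drone: pitch-class energy alone cannot tell tonal from noisy material, which is why practical systems combine several descriptors.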

Some systems go further, analyzing the temporal evolution of sounds to understand their dramatic arc. They can identify which sounds build tension, which provide resolution, and which maintain steady-state energy. This temporal analysis becomes particularly valuable when designing complex sequences where individual elements must work together to create specific emotional trajectories.
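
One simple way to estimate a sound's arc is to track its loudness envelope and measure the trend. The sketch below fits a least-squares slope to frame-by-frame RMS level in dB, assuming that rising level reads as building tension and falling level as resolution. That mapping is a heuristic, not an established model of emotional response, and the ±3 dB/s threshold is an arbitrary placeholder.

```python
import math

def rms_envelope_db(samples, frame=512):
    """Level of each non-overlapping frame in dB."""
    env = []
    for start in range(0, len(samples) - frame + 1, frame):
        chunk = samples[start:start + frame]
        rms = math.sqrt(sum(s * s for s in chunk) / frame)
        env.append(20 * math.log10(max(rms, 1e-9)))
    return env

def slope(values):
    """Ordinary least-squares slope of values against their index (needs >= 2 values)."""
    n = len(values)
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    num = sum((i - mean_x) * (v - mean_y) for i, v in enumerate(values))
    den = sum((i - mean_x) ** 2 for i in range(n))
    return num / den

def dramatic_arc(samples, fs, frame=512, threshold_db_per_s=3.0):
    """Label a sound 'builds', 'resolves', or 'steady' from its loudness trend."""
    env = rms_envelope_db(samples, frame)
    db_per_s = slope(env) * fs / frame  # frames per second = fs / frame
    if db_per_s > threshold_db_per_s:
        return "builds"
    if db_per_s < -threshold_db_per_s:
        return "resolves"
    return "steady"

fs = 8000
n = 2 * fs  # two seconds
swell = [(0.01 + 0.99 * i / n) * math.sin(2 * math.pi * 200 * i / fs) for i in range(n)]
hum = [0.5 * math.sin(2 * math.pi * 200 * i / fs) for i in range(n)]
print(dramatic_arc(swell, fs))        # builds
print(dramatic_arc(swell[::-1], fs))  # resolves
print(dramatic_arc(hum, fs))          # steady
```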

Adaptive Processing and Intelligent Effects

Traditional audio processing typically relies on parameter settings that stay fixed throughout a sound’s duration unless they are manually automated. Machine learning enables adaptive processing that responds to the changing characteristics of audio content in real time. Compressors can adjust their attack and release times based on the musical content, reverbs can modify their characteristics to complement the harmonic structure of incoming audio, and EQs can make subtle adjustments to maintain tonal balance as frequency content evolves.
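
Program-dependent compression predates machine learning, but it shows the mechanism such a model would drive. In this Python sketch, a hypothetical rule chooses attack and release times for each block from its crest factor (peak-to-RMS ratio): spiky material gets fast times, dense material slower ones. A trained model would take the place of `choose_times`; the envelope follower and static 4:1 gain curve around it follow a standard feed-forward compressor design. Threshold, ratio, and time constants are illustrative values.

```python
import math

def one_pole_coeff(time_ms, fs):
    """Smoothing coefficient for a one-pole envelope follower with the given time constant."""
    return math.exp(-1.0 / (time_ms * 0.001 * fs))

def crest_factor_db(block):
    """Peak-to-RMS ratio in dB; high values indicate spiky, transient material."""
    peak = max(abs(s) for s in block)
    rms = math.sqrt(sum(s * s for s in block) / len(block))
    return 20 * math.log10(peak / rms) if rms > 0 else 0.0

def choose_times(block):
    """Hypothetical rule standing in for a learned model: returns (attack_ms, release_ms)."""
    if crest_factor_db(block) > 12.0:
        return 1.0, 50.0    # transient-heavy: react quickly, recover quickly
    return 10.0, 200.0      # dense or sustained: smoother, slower settings

def compress(samples, fs, threshold_db=-20.0, ratio=4.0, block=256):
    """Feed-forward peak compressor whose time constants are re-chosen every block."""
    out, env = [], 0.0
    for start in range(0, len(samples), block):
        chunk = samples[start:start + block]
        attack_ms, release_ms = choose_times(chunk)
        a_att = one_pole_coeff(attack_ms, fs)
        a_rel = one_pole_coeff(release_ms, fs)
        for s in chunk:
            level = abs(s)
            coeff = a_att if level > env else a_rel
            env = coeff * env + (1.0 - coeff) * level
            env_db = 20 * math.log10(max(env, 1e-9))
            over_db = max(0.0, env_db - threshold_db)
            gain_db = -over_db * (1.0 - 1.0 / ratio)  # static curve above threshold
            out.append(s * 10 ** (gain_db / 20))
    return out

fs = 8000
loud = [math.sin(2 * math.pi * 100 * i / fs) for i in range(fs)]
quiet = [0.01 * x for x in loud]
squashed = compress(loud, fs)
```

A quiet input never crosses the threshold and passes through untouched, while the full-scale tone settles to a peak of roughly 0.2.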

These adaptive systems learn by analyzing how experienced engineers typically adjust their processing in response to different types of content. Rather than replacing human decision-making, they provide a starting point that reflects professional practices, which designers can then refine according to their specific creative vision.
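
In the simplest case, learning from engineers means fitting a mapping from measured audio features to the settings they chose. This sketch fits an ordinary least-squares line from crest factor to release time. The five training pairs are invented for illustration, not measurements, and a real system would use many more features and examples.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

# Invented training pairs: (crest factor in dB, release time in ms an engineer chose).
history = [(6, 220), (9, 160), (12, 110), (15, 70), (18, 40)]
slope, intercept = fit_line([c for c, _ in history], [r for _, r in history])

def suggest_release_ms(crest_db, floor_ms=20.0):
    """Predicted starting point, clamped so it never drops below a sane minimum."""
    return max(floor_ms, slope * crest_db + intercept)

print(suggest_release_ms(10.0))  # 150.0
```

For a clip with a 10 dB crest factor the fitted line suggests 150 ms, a starting point the designer remains free to override.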

The most sophisticated adaptive processors consider not just the immediate audio content but also the broader context within a project. They model how individual sounds relate to the overall mix, automatically adjusting processing to maintain clarity and impact while preserving the designer’s artistic intent.

Predictive Workflow Assistance

Machine learning systems are beginning to anticipate designer needs based on project context and historical patterns. These systems analyze past projects to learn typical workflow patterns, then suggest sounds that fit the current project’s characteristics, genre, and emotional requirements. When working on an action sequence, the system might proactively suggest gunshot and explosion variants before the designer even begins searching.
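
A basic version of this is co-occurrence counting: record which sound categories appeared together in past projects, then rank the categories most often used alongside the ones already in the current session. The project tags below are hypothetical, and a real system would also weight by recency, genre, and user behavior.

```python
from collections import Counter
from itertools import permutations

def build_cooccurrence(projects):
    """projects: iterable of sets of category tags used together in past sessions."""
    co = {}
    for tags in projects:
        for a, b in permutations(tags, 2):
            co.setdefault(a, Counter())[b] += 1
    return co

def suggest(co, current_tags, k=3):
    """Rank tags that most often appeared alongside the current session's tags."""
    scores = Counter()
    for tag in current_tags:
        scores.update(co.get(tag, Counter()))
    for tag in current_tags:
        scores.pop(tag, None)  # don't suggest what is already in use
    return [tag for tag, _ in scores.most_common(k)]

# Hypothetical history of past projects.
past_projects = [
    {"action", "gunshot", "explosion", "debris"},
    {"action", "gunshot", "ricochet"},
    {"action", "explosion", "sirens"},
    {"ambient", "wind", "birds"},
    {"ambient", "rain", "wind"},
]
co = build_cooccurrence(past_projects)
print(suggest(co, {"action"}, k=2))
```

For an action session the top two suggestions are "gunshot" and "explosion", each of which appeared with "action" in two past projects; their relative order is a tie and may vary between runs.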

This predictive assistance extends to technical decisions as well. Systems can suggest EQ curves based on the acoustic characteristics of existing project elements, propose reverb settings that match the established spatial aesthetic, or flag potential masking issues before they become problematic.
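
Masking detection can be roughly approximated by comparing the per-band levels of two tracks and flagging bands where both are strong and close in level. This is a crude heuristic rather than a psychoacoustic masking model; the octave band centres, the -30 dB floor, the 6 dB gap, and the example levels are all assumptions for illustration.

```python
OCTAVE_BANDS_HZ = [63, 125, 250, 500, 1000, 2000, 4000, 8000]

def masking_candidates(levels_a_db, levels_b_db, floor_db=-30.0, max_gap_db=6.0):
    """Return band centres where both tracks are above floor_db and within max_gap_db
    of each other, i.e. where neither clearly dominates (a rough masking-risk heuristic)."""
    flagged = []
    for hz, a, b in zip(OCTAVE_BANDS_HZ, levels_a_db, levels_b_db):
        if min(a, b) > floor_db and abs(a - b) <= max_gap_db:
            flagged.append(hz)
    return flagged

# Illustrative per-band levels in dB, e.g. read from an analyzer; not real measurements.
dialogue = [-60, -40, -20, -12, -10, -14, -20, -35]
music_bed = [-15, -12, -14, -16, -18, -22, -28, -34]
print(masking_candidates(dialogue, music_bed))  # [250, 500]
```

Here the dialogue and the music bed collide at 250 Hz and 500 Hz, which is where a designer might try a gentle cut on the music.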

Collaborative Intelligence: Human-Machine Creative Partnerships

The most exciting developments emerge when machine learning augments rather than replaces human creativity. Intelligent systems can generate multiple variations of a sound designer’s initial concept, exploring creative territories that the human might not have considered while maintaining consistency with the overall artistic vision.

These collaborative workflows often involve iterative refinement, where designers provide feedback that helps machine learning systems better understand their creative preferences. Over time, the systems develop personalized models that reflect individual artistic sensibilities while still offering surprising creative suggestions.
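
One way to model this feedback loop is an online preference model: each generated variation is described by a few features, and every accept or reject nudges a logistic-regression model toward the designer's taste. The feature names (brightness and decay length, both on a 0 to 1 scale), the learning rate, and the feedback below are hypothetical.

```python
import math

class PreferenceModel:
    """Hypothetical per-designer taste model: logistic regression updated online,
    one accept/reject decision at a time (stochastic gradient descent on log loss)."""

    def __init__(self, n_features, lr=0.5):
        self.w = [0.0] * n_features
        self.b = 0.0
        self.lr = lr

    def score(self, x):
        """Estimated probability that the designer will like a variation with features x."""
        z = self.b + sum(wi * xi for wi, xi in zip(self.w, x))
        return 1.0 / (1.0 + math.exp(-z))

    def update(self, x, liked):
        """One gradient step toward the observed decision."""
        err = (1.0 if liked else 0.0) - self.score(x)
        self.w = [wi + self.lr * err * xi for wi, xi in zip(self.w, x)]
        self.b += self.lr * err

    def rank(self, candidates):
        """Sort (name, features) pairs from most to least likely to be accepted."""
        return sorted(candidates, key=lambda item: self.score(item[1]), reverse=True)

# Features: (brightness, decay_length). This designer keeps choosing dark,
# long-decaying variations.
feedback = [((0.2, 0.9), True), ((0.1, 0.8), True), ((0.9, 0.2), False), ((0.8, 0.3), False)]
model = PreferenceModel(n_features=2)
for _ in range(20):
    for x, liked in feedback:
        model.update(x, liked)

candidates = [("var_b", (0.85, 0.25)), ("var_a", (0.15, 0.85))]
print([name for name, _ in model.rank(candidates)])  # ['var_a', 'var_b']
```

Because the model keeps updating, its ranking can drift as the designer's choices change, while new candidates that sit outside its learned preferences can still be offered deliberately to keep suggestions surprising.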

Real-Time Performance and Interactive Applications

Machine learning enables real-time sound generation and manipulation that responds intelligently to changing parameters. In interactive media like video games, these systems can generate appropriate audio responses to complex combinations of environmental factors, player actions, and narrative context. Rather than playing back pre-recorded samples, they synthesize contextually appropriate audio that feels organic and responsive.

Challenges and Creative Considerations

Despite their capabilities, current machine learning systems face significant limitations. They struggle with understanding broader creative context beyond their training data, and their outputs often require human curation to maintain artistic coherence. The quality of generated content depends heavily on training data quality, and systems can perpetuate biases present in their source material.

The most successful implementations recognize these limitations while leveraging machine learning’s strengths in pattern recognition, variation generation, and workflow optimization. Rather than seeking to automate creativity entirely, they focus on enhancing human capabilities and accelerating tedious technical tasks.

The future promises even more sophisticated integration between human creativity and machine intelligence, opening creative possibilities that neither could achieve alone.